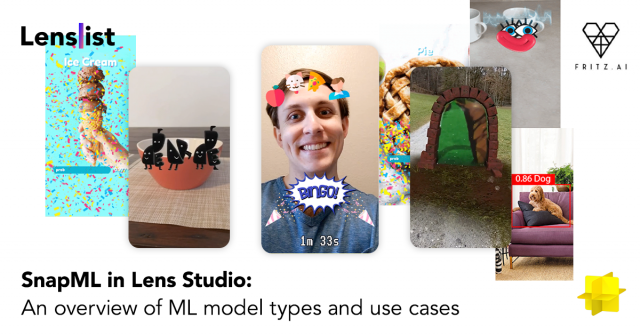

SnapML in Lens Studio: An Overview of ML Model Types and Use Cases

As promised, we come to you with the second part of our SnapML x Fritz AI article, this time with a guest post from Austin from the aforementioned Fritz AI. Austin will tell you all about possible use cases for utilizing their software to create many types of ML-enabled, powerful AR experiences. Without further ado, let’s hear it from Austin! 👇

Machine learning unlocks a wide range of possibilities when it comes to AR creation, and the two technologies can be quite complementary—nobody in the industry has proved that to be true more than Snapchat, whose immersive AR Lenses work so well in large part because of the complex ML models running underneath them (things like face tracking, background segmentation, and more).

With SnapML, though, Snapchat has opened up this capability to its community of Lens Creators, allowing them to bring completely custom machine learning models into their Lenses. This quite literally extends what Creators can do with Lens Studio.

Sounds great, right?

But the truth is, machine learning isn’t always easy to understand conceptually, much less actually implement in Lenses. In this blog post, I want to try to demystify at least one part of this equation—what’s actually creatively possible with SnapML in Lens Creation.

Image Classification

Also commonly referred to as image labeling and image recognition, image classification is a computer vision task that simply predicts what is seen within an image or video frame.

Often, that prediction is mapped to a text label that can either be shown to end users or trigger a global AR effect. For example, you might have a classification model that can see and recognize a variety of different dessert food items, as shown in the gif below:

Notice that the confetti effect above isn’t targeted or localized within the camera scene—instead, the prediction triggers an effect that isn’t directly attached to the object being recognized.

If the AR effect you’d like to implement doesn’t need to be connected directly to the component being classified (in this case, the range of desserts themselves), then a classification model should work well.
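To make that logic concrete, here's a minimal Python sketch (not Lens Studio code) of how a classification result might be turned into a global trigger. The label list, the 0.6 confidence threshold, and the function names are all illustrative assumptions, not part of SnapML or any real model.

```python
import math

# Hypothetical label set for a dessert classifier (illustrative only).
LABELS = ["cupcake", "donut", "ice cream", "no dessert"]


def softmax(scores):
    """Convert raw model scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores, threshold=0.6):
    """Return (label, probability) for the top class, or (None, probability) if unsure."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return None, probs[best]
    return LABELS[best], probs[best]


def should_trigger_confetti(scores):
    """A global effect needs only the predicted label, not the object's position."""
    label, _ = classify(scores)
    return label is not None and label != "no dessert"
```

Notice that nothing in the sketch knows where the dessert is in the frame. That's exactly why classification pairs well with scene-wide effects and poorly with effects that need to follow an object.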

Sample Image Classification Lenses:

by Cinehackers

by Maxime C

by Luka Lan Gabriel

by Mike

Object Detection

If you want to anchor an AR effect to a target object as it moves through a scene, then image classification won’t quite do the trick. Object detection takes things a step further: it locates, counts, and tracks instances of each target class and provides four “bounding box” coordinates, essentially a rectangle outlining the detected object.

We can see how this works in practice, with a Mug Detection model in this Lens from Creator JP Pirie:

As you can see in the example above, because object detection allows us to locate each individual instance of targeted objects in images or across video frames, AR effects can become much more connected to those objects.
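Here's a rough Python sketch (again, not Lens Studio code) of what "anchoring" to a bounding box can mean in practice. It assumes each detection carries a label, a confidence score, and four box coordinates normalized to the 0-1 range; the `Detection` class, the 0.5 threshold, and the 720x1280 frame size are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected object; box coordinates are assumed normalized to 0-1."""
    label: str
    score: float
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def anchor_for(det, frame_width, frame_height):
    """Return the pixel center and size an effect could use to follow the box."""
    center_x = (det.x_min + det.x_max) / 2 * frame_width
    center_y = (det.y_min + det.y_max) / 2 * frame_height
    width = (det.x_max - det.x_min) * frame_width
    height = (det.y_max - det.y_min) * frame_height
    return center_x, center_y, width, height


def confident(detections, label, min_score=0.5):
    """Keep only detections of one class at or above a confidence threshold."""
    return [d for d in detections if d.label == label and d.score >= min_score]


detections = [
    Detection("mug", 0.91, 0.25, 0.50, 0.75, 1.00),
    Detection("mug", 0.30, 0.00, 0.00, 0.10, 0.10),  # too uncertain, dropped
]
mugs = confident(detections, "mug")
print(len(mugs), anchor_for(mugs[0], 720, 1280))
```

Running the same steps on every frame gives the effect a fresh position and size each time, which is what lets it appear attached to the object as it moves.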

This kind of model could also be especially effective for Lenses that need to anchor AR effects to brand logos, product packaging, or other unique objects that you want users to engage with directly.

Sample Object Detection Lenses:

by jp pirie

by jp pirie

by Ger Killeen

by Atit Kharel

Segmentation

Segmentation models predict pixel-level masks for green-screen-like effects or photo composites. Segmentation can work on just about anything: a person’s hair, the sky, a park bench — whatever you can dream up.

Segmentation is a big part of the ML that’s already baked into Lens Studio, with the primary portrait segmentation template serving as a starting point for some really immersive experiences. But SnapML unlocks this powerful task for all kinds of other objects and scene elements.

So if you want your AR effects to track closely to an object, or augment entire parts (i.e., segments) of a scene like the floor or walls, then segmentation is the right machine learning task for you.

For instance, in this Lens from Creator Ryan Shields, you can see that both the fire and ice shaders are applied only to the segmentation mask of the evergreen tree.

Segmentation thus takes us another step further when it comes to scene understanding. Boundaries between objects or scene elements become clearer, and the opportunities to augment and manipulate those boundaries become more fine-grained.
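For a sense of how a pixel-level mask confines an effect, here's a small Python sketch of mask-based compositing on a toy grayscale frame. It assumes the model outputs one value per pixel between 0 (not the target) and 1 (the target); the `composite` function and the sample arrays are hypothetical, and a real Lens would do this on the GPU rather than in Python lists.

```python
def composite(original, effect, mask):
    """Blend effect over original per pixel, weighted by the mask.

    A mask value of 0 keeps the original pixel; 1 shows the effect fully.
    """
    return [
        [m * e + (1 - m) * o for o, e, m in zip(o_row, e_row, m_row)]
        for o_row, e_row, m_row in zip(original, effect, mask)
    ]


# A tiny 2x3 grayscale "frame" with values in the 0-1 range.
original = [[0.2, 0.2, 0.2],
            [0.2, 0.2, 0.2]]
effect = [[1.0, 1.0, 1.0],
          [1.0, 1.0, 1.0]]
# Hypothetical model output: the target object covers the middle column.
mask = [[0.0, 1.0, 0.0],
        [0.0, 1.0, 0.0]]

result = composite(original, effect, mask)
```

Only the middle column of `result` takes on the effect's values; everything outside the mask is left untouched. Fractional mask values would give soft edges instead of a hard cutout.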

Sample Segmentation Lenses:

by RYΛN SHIELDS

by jp pirie

Style Transfer

Style transfer is a computer vision task that allows us to take the style of one image and apply its visual patterns to the content of another image/video stream.

For Lens Creators, it’s also the easiest ML task to get started with, as you don’t need a full dataset of images—just one good style image that the model will learn visual patterns from.
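If you're curious what "visual patterns" can mean numerically, one common approach from the original neural style transfer research is to summarize a style as a Gram matrix: the correlations between a network's feature channels, with spatial position thrown away. The Python sketch below computes that summary for made-up feature maps. It illustrates the general idea only, and it's an assumption that any particular training tool, Fritz AI's included, works this way.

```python
def gram_matrix(feature_maps):
    """Channel-by-channel correlations of flattened feature maps.

    feature_maps: a list of channels, each a flat list of activations
    of equal length (one value per spatial position).
    """
    n = len(feature_maps[0])
    return [
        [sum(a * b for a, b in zip(ch_i, ch_j)) / n for ch_j in feature_maps]
        for ch_i in feature_maps
    ]


# Two toy feature channels over four spatial positions (made-up values).
features = [[1, 2, 0, 0],
            [1, 0, 3, 0]]
# The same activations with the spatial positions reversed.
rearranged = [channel[::-1] for channel in features]

style = gram_matrix(features)
style_rearranged = gram_matrix(rearranged)
```

Both calls produce the same matrix, because the summary records which patterns occur together rather than where they occur. That's roughly why a style can be lifted from one image and laid over the very different layout of another.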

On their own, style transfer models make for interesting artistic expressions, and with SnapML, it’s never been easier to get those creative Lenses in front of the world. Additionally, Creators can leverage Style Transfer in different ways—with other ML tasks like segmentation, as the effect on the other side of a portal (as shown in the gif below), and much more.

For best practices in choosing style images to train these models on, check out our quick guide, which includes some tips and tricks as well as a variety of sample styles.

Sample Style Transfer Lenses:

by Luka Lan Gabriel

by Cinehackers

by Jonah Cohn

by tyleeseeuh

Wrapping Up

While these are the core computer vision-based ML tasks that can power SnapML projects in Lens Studio, the sample use cases presented here only scratch the surface of what’s possible with this new set of capabilities.

What’s more, getting started with SnapML in Lens Studio is getting easier all the time—at Fritz AI, we have a collection of ready-to-use pre-trained SnapML projects, as well as an end-to-end, no-code platform for training style transfer and custom ML models from scratch.

Fritz AI for SnapML is currently in beta and free to use. We’re excited to see what you’ll create with SnapML in Lens Studio.

For explainers, tutorials, Creator spotlights, and more, check out the collected resources on our blog, Heartbeat.

Author

Austin Kodra is the Head of Content and Community at Fritz AI, the machine learning platform for SnapML/Lens Studio. He’s also the Editor-in-Chief of Heartbeat, a contributor-driven publication that explores the intersections of AI, AR, and mobile.
