Snapchat Introduces 2D Full Body Tracking

FINALLY! A feature the whole AR Creators Community has been waiting for came to us with the newest update of Lens Studio (3.1): 2D Full Body Tracking is here and… we couldn’t be more here for it!

Snapchat released this update at the perfect moment. Thanks to TikTok, rear-view videos of dances and sketches have become ultra popular, and not only creators but also brands and marketers have noticed the trend and the opportunities it brings to the social media AR table.

As part of the promotional campaign for this update, Snapchat brought us four pairings of Official Lens Creators and Snap Stars to show the capabilities of 2D FBT. We decided to investigate and gather some insights from the Snap creators themselves to find out how this feature can and will be used by the community. Check out their Lenses and comments below!

Be Happy by Dixie D’Amelio and Abbas Sajad

From Official Lens Creator Abbas Sajad: “I built a Lens to complement Dixie D’Amelio’s new song “Be Happy.” The song is a reminder that you’re in control of your own happiness, so we designed the Lens so that your movements control AR weather effects, which reflect different kinds of feelings. For example, when you’re feeling down, you relate more to rain and dark clouds, while happiness feels more like a sunny day. So when you use the Lens and throw your hands in the air, the rain and dark clouds disperse, bringing in the bright sun. To keep the Lens natural, I drew all these effect animations by hand, frame by frame. It was great to collaborate with Dixie and we’re happy with the final Lens, so I can’t wait for people to try it!”
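The “throw your hands in the air” interaction is a good example of what a 2D body trigger does under the hood. Lens Studio ships its own trigger templates, so the Python sketch below is neither its API nor Abbas’s implementation. It is a platform-agnostic illustration that assumes a hypothetical joint format: a dict mapping names like `nose` and `left_wrist` to normalized (x, y) positions, with y growing downward. It checks whether both wrists are above the nose and fires only on the frame the pose begins.

```python
from typing import Dict, Tuple

# Hypothetical joint format: joint name -> (x, y) in normalized image
# coordinates, where y = 0 is the top of the frame and y = 1 the bottom.
Joints = Dict[str, Tuple[float, float]]


def hands_raised(joints: Joints, margin: float = 0.05) -> bool:
    """Return True when both wrists are clearly above the nose."""
    required = ("nose", "left_wrist", "right_wrist")
    # If any joint is not tracked this frame, do not fire the trigger.
    if any(name not in joints for name in required):
        return False
    nose_y = joints["nose"][1]
    # Smaller y means higher in the frame; the margin keeps the trigger
    # from flickering when the wrists hover right at nose height.
    return all(joints[w][1] < nose_y - margin
               for w in ("left_wrist", "right_wrist"))


class EdgeTrigger:
    """Reports True only on the frame a condition switches from off to on."""

    def __init__(self) -> None:
        self._was_active = False

    def update(self, active: bool) -> bool:
        fired = active and not self._was_active
        self._was_active = active
        return fired


if __name__ == "__main__":
    down = {"nose": (0.5, 0.3), "left_wrist": (0.4, 0.6), "right_wrist": (0.6, 0.6)}
    up = {"nose": (0.5, 0.3), "left_wrist": (0.4, 0.1), "right_wrist": (0.6, 0.1)}
    trigger = EdgeTrigger()
    events = [trigger.update(hands_raised(f)) for f in (down, up, up, down, up)]
    print(events)  # [False, True, False, False, True]
```

Firing on the rising edge, rather than on every frame the arms are up, means that in a sketch like this holding the pose does not restart the effect over and over.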

Star Burst by Jalaiah Harmon and Jye Trudinger

From Official Lens Creator Jye Trudinger: “Jalaiah liked the concept of a Lens that followed and amplified your movements, so I took this concept and ran with it. We really wanted the colors to pop and be super vibrant, so we designed it to create a rainbow aura that emphasizes your movements and grabs the attention of anyone watching this Lens in use. We also built the Lens to amplify any sudden stops in movement, so you can dance and pose to trigger an AR effect that turns a white silhouette into a colorful burst. It was awesome to collaborate with Jalaiah and share new ideas to elevate the quality of the Lens, and it was amazing to see the Lens come to life through her dance style.”
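Detecting a “sudden stop” can be sketched as a speed check on a single tracked joint: remember that the joint was moving fast, then fire when its speed drops to near zero. This is a hypothetical Python illustration, not how Jye’s Lens or Lens Studio actually does it; the `fast` and `still` thresholds, the normalized-units-per-second speed, and the per-frame `dt` are all assumptions.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # hypothetical normalized (x, y) joint position


class SuddenStopDetector:
    """Flags the frame on which a fast-moving joint abruptly comes to rest."""

    def __init__(self, fast: float = 1.0, still: float = 0.1) -> None:
        # Speeds are in normalized image units per second (illustrative values).
        self.fast = fast    # at or above this speed the joint counts as "moving fast"
        self.still = still  # at or below this speed the joint counts as "stopped"
        self._prev: Optional[Point] = None
        self._was_fast = False

    def update(self, pos: Point, dt: float) -> bool:
        """Feed one frame's joint position; return True on a sudden stop."""
        if self._prev is None or dt <= 0:
            self._prev = pos  # first frame (or bad timestamp): no speed yet
            return False
        speed = math.dist(pos, self._prev) / dt
        self._prev = pos
        if speed >= self.fast:
            self._was_fast = True
            return False
        if speed <= self.still and self._was_fast:
            self._was_fast = False  # re-arm only after the next fast movement
            return True
        return False


if __name__ == "__main__":
    detector = SuddenStopDetector()
    # A wrist sweeping right at 30 fps, then freezing in a pose.
    path: List[Point] = [(0.5, 0.5), (0.55, 0.5), (0.6, 0.5), (0.601, 0.5), (0.601, 0.5)]
    print([detector.update(p, 1 / 30) for p in path])
    # [False, False, False, True, False]
```

Requiring a fast phase before the stop is what separates a deliberate freeze from simply standing still.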

Alone by Loren Gray and Max van Leeuwen

From Official Lens Creator Max van Leeuwen: “For the Snaplens I made with Loren Gray, it was important to match her style/aesthetic, especially since it also features her music. The hand-drawn animated doodles I used were a nice blend of cute and minimalistic, so I used that as the basis. I wanted the Snaplens to be only a subtle addition, so the Lens would be more versatile and you could use it even if you weren’t playing the music in the background. It shouldn’t take over the whole screen. The frame rate of the animated doodles is lower, making it feel a bit like stop-motion on paper instead of a virtual element. I also added a small but strong film grain and a custom color post effect to make the image warmer and more organic.”

Be you by Sarati and Robin Delaporte

How was the collaboration between you and the Snap Star? Did you come up with the idea together, or did you simply draw inspiration from what they do?

Abbas Sajad: I had a lot of fun working on this project with Dixie. She wanted to make sure that the user felt that they could be in control of the Lens, and it had to mirror the aesthetic of her ‘Be Happy’ music video. So I took the initial seedling of the idea and kept expanding on it to bring it to life in a Lens.

Jye Trudinger: The Lens was originally inspired by one of my existing Lenses: Trails. Jalaiah liked the concept of a Lens that followed and amplified your movements – so I took this concept and ran with it.

Which aspect of the 2D Full Body Tracking is more exciting and useful for you – triggers or attachments? Why?

Abbas Sajad: They’re both exciting features with limitless potential, and I can’t wait to use them more in the future. However, I find myself drawn more to triggers. I feel they add an element of interactivity between my animations and the user, and I can’t wait to use them more when designing game Lenses.

Jye Trudinger: I think they’re both very useful features. For me, triggers are the most interesting, as they add another level at which the user can interact with a Lens and open up a lot of different controls and unique interactions.

How do you think brands (and what kind of brands) and other creators can use this feature? Could you give us some examples of use cases for 2D Full Body Tracking?

Abbas Sajad: I can see all types of brands using these new features. For a while, AR on social media tended to focus on the user’s face, but there’s only so much you can do there. With body tracking, you’re incorporating full-body movement and expression, whereas before you were limited to certain parts of the body. For example, a fitness brand might want to create a workout-related game that uses these new features.

Jye Trudinger: I can definitely see brands selling fashion/outerwear using these features to promote their products – especially if the 2D tracking moves into 3D.

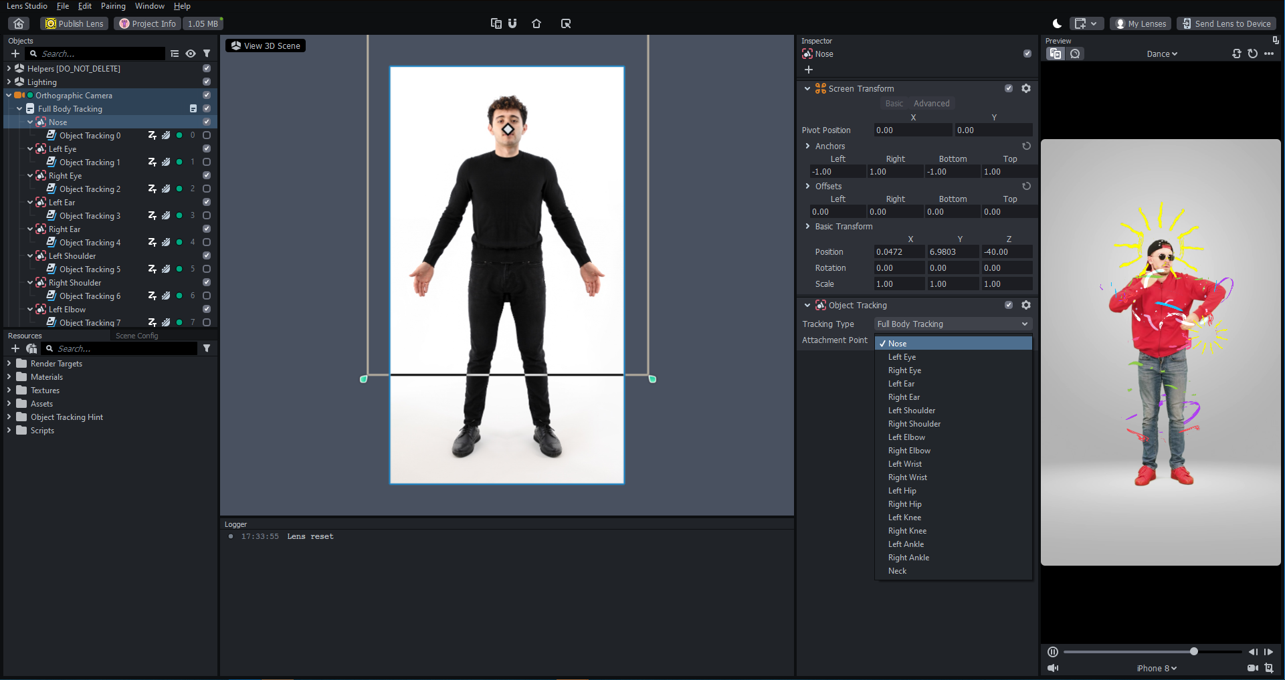

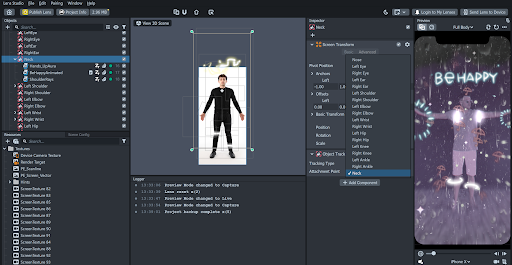

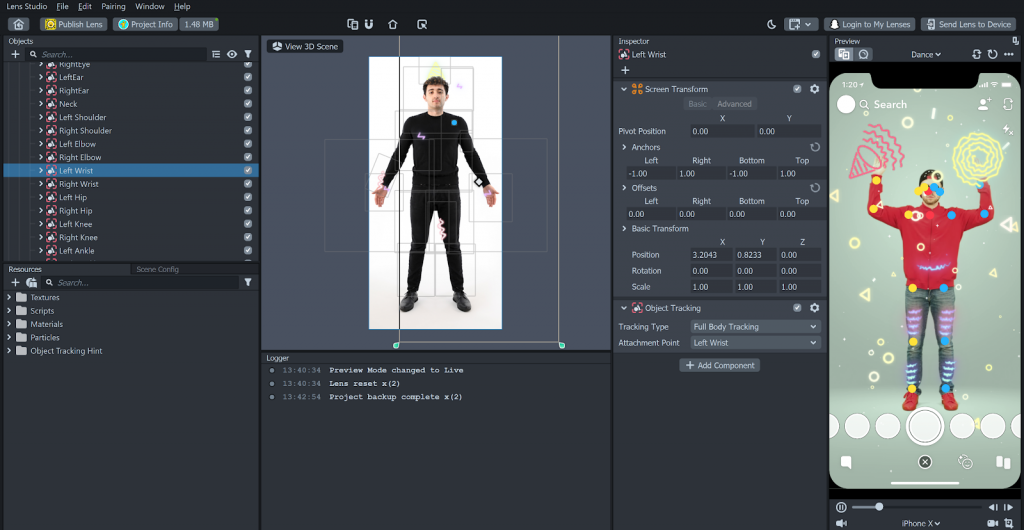

Lens Studio 3.1 lets you track 18 joints and trigger effects based on those body movements. Can you share some screenshots from Lens Studio that show how to pick the specific joints to track?

Abbas Sajad: Here are some screenshots from inside Lens Studio. One is from the Be Happy Lens, and the other is from the Full Body Trigger template, showing the different joints (see the screenshots above).

Jye Trudinger: The Full Body Tracking Attachments template lays out all the tracking points with sample sprites already in the scene. On the object tracking component, you can then select which point to attach a sprite or image to.

Ever since the world of AR became open and democratized in 2017, Full Body Tracking has been one of the most anticipated features in the creator community. How do you think it will affect your future projects and influence the way people use and perceive social media AR?

Abbas Sajad: I’m incredibly excited to include the whole body in more of my future projects. AR for social media has progressed so much in the past few years. One thing I can’t wait to see is the number of games that will come from these features and the way creators will push them to their limits. In the past, we’ve seen all types of AR effects start viral trends. I can see the same thing happening with these new features, dynamically changing content creation on social media.

Jye Trudinger: I haven’t personally thought of many ways to use 2D body tracking just yet, but it’s only a matter of time before others and I create some amazing Lenses using this technology. I’ve already seen a really awesome Lens by fellow Official Lens Creator mkcola that appears to use body tracking and segmentation to attach butterfly wings behind the user. I’m sure it won’t be long before some brands see this new technology and find a way to integrate their product advertising into a Lens that uses these features. I also think it encourages people to use filters for more than just their face, and to take the time to set up their phone in a position that shows more than just their face so they can interact with a Lens that way.