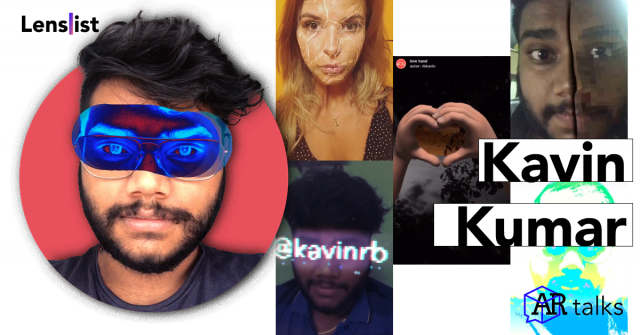

AR Talk With Kavin Kumar – Multiplatform AR Creator

Kavin Kumar is one of the most prolific AR Creators from India, and he works in both Lens Studio and Spark AR. We’ve been following his work for quite some time now, and what’s interesting about his portfolio is how versatile it is – he keeps surprising us by using technologies like Machine Learning or by creating increasingly advanced 3D models. He also runs a YouTube channel with tutorials that help the AR Community learn and grow.

Hi Kavin! Thanks for taking the time to answer our questions 🙂 First, please tell us more about yourself and your AR journey so far.

I’m a full-time social media AR developer who makes filters for Snapchat and Instagram. I started using Spark AR as a hobby back in 2018 and made it my full-time job in 2019.

I’m a computer science graduate. I was looking into AR and VR when I found this. I came to like this field because it involves shaders, graphic design and other creative work. I started designing 3D models for my own filters, enjoyed the process and decided to continue, and since then I’ve kept experimenting with 3D, 2D and shader-based projects.

You seem to be part of a fairly small group of Creators who are equally prolific at designing filters in both Spark AR and Lens Studio. Could you tell us more about that? What do you consider the most important differences between these two platforms?

As I mentioned before, I started designing in Spark AR in 2018, then tried Lens Studio around December 2019, and now I’m an Official Lens Creator for Snapchat. While exploring it, I found that Lens Studio has more advanced features than Spark AR in some areas. The biggest difference, I feel, is that Spark AR has the Patch Editor, which we can use for both logic and shaders, whereas Lens Studio works more like a game engine.

Lens Studio now has node-based solutions as well, with separate ones for logic and for materials. I still prefer the scripting side of Lens Studio, as I feel it gives me an extra advantage. But both platforms have their own strengths and different approaches, and what I like about designing for both is that they don’t try to be the same thing.

Your AR tutorials have been watched thousands of times on YouTube – why did you decide to start the channel, and what do you focus on in your tutorials? Are they more suitable for beginners or for advanced AR Creators?

I started making tutorials because I saw many people facing the same problems again and again. As most people in the AR community are designers rather than coders, it can be difficult for them to understand issues involving code. So I decided to create tutorials that help people understand complex topics like Render Pass in Spark AR. It’s a difficult subject, so I tried to explain it in a way that works for both beginners and advanced Creators.

Similarly, Dynamic Instancing can only be done with code for now, so I made a tutorial for people who don’t have much experience with it. My goal is to solve common problems that people face and that can be fixed easily with code.

Please pick two of your favorite filters – one you made for Snapchat and one for Instagram – and take us through the making-of process.

One of my favorite Lenses for Snapchat is the love hand. It was the first filter, on either platform, that I built entirely myself: I made the hands and the animation from scratch. It’s basically two hands that rise from the ground and form a heart sign. Only through the space between the hands can you see the world in color; the rest of the screen is black and white. It was a fun project, as I had to figure out how to model decent hands and animate them, so I learned a lot along the way.

My favorite one for Instagram is Ghost in the Book. This was a collaborative project I did with Don Allen (what up Don!), where he made the book and I made the rest of the design. It’s basically a book you can place on a surface. If you tap on it, a ghost comes out of the book; if you move closer to it, it attacks you, and if you stay away, it tries to escape.

In the intro I mentioned how versatile your projects are – can you tell us more about your inspirations? Where do you get your ideas, and what are your favorite filters to make?

As I don’t stick to any particular type of filter, I get ideas from everywhere. Sometimes I get inspired when I watch movies or a cool TV series, and sometimes when I see other artworks. For the love hand Lens, for example, I thought of making an experience where users can see what love can do in their lives. So I made the world black and white, and only inside the heart is the world full of color. For my recent Lens called Proposal, I simply thought of making a virtual proposal for the singles out there. My inspiration is mostly the things I would like to see in the real world. I feel AR is a way to bring our own worlds into reality and also a tool to modify the reality I live in. So, to answer where I get my inspiration: I make the things I want to add to this reality.

Last year Lens Studio introduced many interesting new features, such as Full Body Tracking, SnapML and LiDAR-powered Lenses. Which of these features are you most excited about, and what are your plans for future projects – do they include any of these features?

I’m excited about all the features you mentioned, but LiDAR is the one I believe can bring the most fun to end users, as it gives us a way to make virtual elements interact with the real world. LiDAR also lets Creators make things that look more real than ever before. For example, think of a game where you raise and care for a virtual pet that roams all over your room and hides behind the chairs and tables in your home. It can help close a huge gap between reality and virtual elements. I’m also excited about updates that will empower Creators to build our own features, so we don’t have to wait for others to make them.

What do you expect to happen with social media AR in 2021? Are you anticipating any groundbreaking new features or fixes that will make the creation process easier or faster, or maybe new ways of distributing and monetizing AR filters?

With the rate at which AR is growing, I think Plane Tracking will soon become so accurate that we won’t be able to tell the difference between real-world elements and virtual ones. I’m also looking forward to seeing more Creators monetize their filters, and of course to more cool features that can access both the front and back cameras, so we can light our virtual 3D elements more realistically. And I anticipate many updates in Machine Learning that will let us track fingers and the human body more precisely, which will make it easier to make virtual 3D objects part of our world.

Thanks, Kavin, for sharing your journey and your vision for the future of our AR Community! We can’t wait to see what you come up with next!